ABOUT THE GAME

What is Shooter: Unknown?

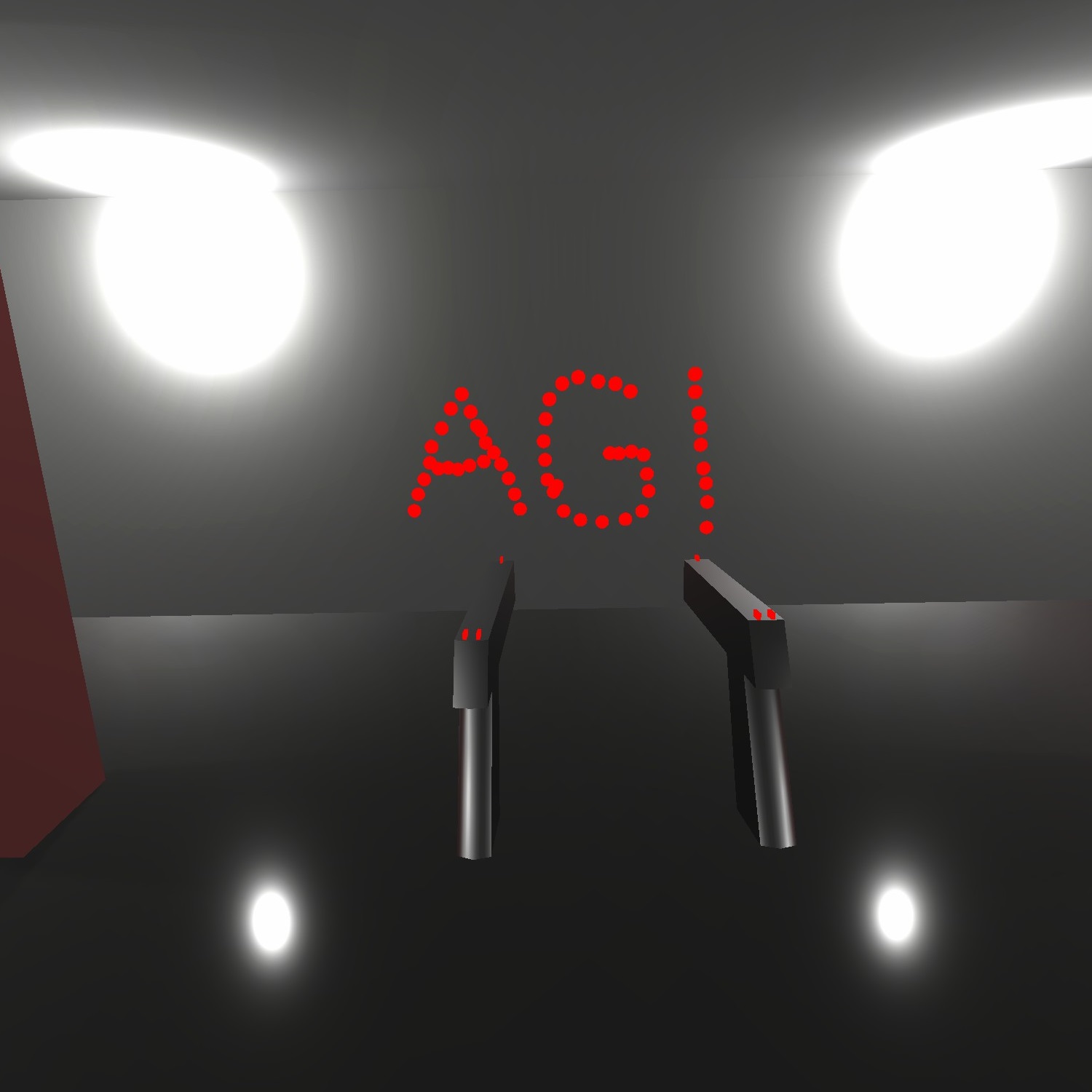

Shooter: Unknown is the result of a project in the advanced graphics and interaction course DH2513 at KTH. The project ran from late November 2022 to early January 2023 and produced an action-packed shooter game. The game is built around an asymmetric cooperative experience in which one player, wearing a VR headset, attempts to clear a level of enemies in intense shootouts, while a second player (or several players) aids the VR player by performing gestures in front of a gesture-tracking camera. These gestures are translated into buffs or other helpful effects, which a drone following the VR player around the scene bestows upon them.

Asymmetrical gameplay

Shooter: Unknown features two highly distinct gameplay styles. One player experiences the level in VR, shooting at enemies who shoot back. This player interacts through the VR headset itself and through the controllers, which are used to fire the akimbo pistols and to teleport around the level. The second player (or players) interacts with the scene through gestures tracked by a camera and views the scene through the eyes of a small drone that follows the VR player. Through this drone, the player or players in front of the camera can bestow various buffs upon the VR player and can also move the drone around the VR player by performing gestures with their body. Leaning to the left, for example, makes the drone circle to the left around the player.
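The lean-to-orbit behavior described above can be sketched as follows. This is a minimal illustration, not the project's actual implementation: the function names, the sign convention for the lean signal, and the parameters `speed`, `radius` and `height` are all assumptions made for the example.

```python
import math

def step_orbit(angle, lean, speed, dt):
    """Advance the drone's orbit angle in radians.

    lean is a hypothetical gesture signal: -1 (lean one way),
    0 (upright) or +1 (lean the other way). Which sign means
    "left" is an arbitrary choice in this sketch.
    """
    return (angle + lean * speed * dt) % (2 * math.pi)

def drone_position(player_pos, angle, radius, height):
    """Place the drone on a horizontal circle around the player.

    player_pos is (x, y, z) with y pointing up, as in Unity.
    """
    px, py, pz = player_pos
    return (
        px + radius * math.cos(angle),
        py + height,
        pz + radius * math.sin(angle),
    )

# Example: one half-second step while the audience player leans.
angle = step_orbit(0.0, lean=1, speed=1.0, dt=0.5)
position = drone_position((0.0, 0.0, 0.0), angle, radius=2.0, height=1.5)
```

Keeping the orbit state as a single angle means the drone stays at a fixed distance from the VR player regardless of how long a lean is held.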

Goals and Motivations

We set out on this second project after completing a fantasy game called Light in the Dark, which featured magic, monsters and a medieval art style. We wanted to make a new game that was totally different and closer to the modern world, and that could serve as a medium for higher-quality and more immersive modeling and graphics. This was the area in which we all wanted to improve and challenge ourselves during the second project period. We wanted to create a realistic gameplay experience with high-quality graphics and combine it with the requirement for project 2: letting viewers interact freely and easily with the game and have fun doing so. We also intended to improve our skills and explore new technology, such as motion capture and AR, to find a way of connecting the virtual and the real world.

Challenges and Obstacles

During the project, the group also ran into a number of obstacles. These issues mainly arose in relation to optimization: as the group strove for realistic-looking graphics, the performance of the game would sometimes take a hit. One example is the VR player view, which we wanted to look as realistic as possible. That required a lot of computing power, which meant that we had to move the audience view to a separate computer, which in turn required networking.

Throughout the project, we also had a lot of issues with networking. Many of them stemmed from using “Unity Netcode for GameObjects”, of which we had no prior knowledge. It also turned out that the scope of implementing networking was much larger than first anticipated and required more work than expected, which meant that other work had to be discarded to make room for it. One of the main reasons the networking took so long was that the VR player and the audience ran on two different systems, while the API we used favored every client running the same system. Because of the two different systems, and for other reasons, the game objects representing the two perspectives were statically placed in the scene. As a result, they were owned by the server rather than by the respective machines that were supposed to control them.

One challenge we faced was that we had no prior experience with gesture controls. This meant that we had to acquire the knowledge as part of the development process, which delayed the start of the implementation because we first needed to learn the theory behind it. During development, one challenge was to ensure that the gesture controls worked for people with different body shapes. If we tuned them for one person, they might not work for another with broader shoulders. Similarly, even if the controls worked for a given person’s skeleton, the person might not be detected by the camera because of the color of their clothes or for similar reasons.
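One common way to reduce the dependence on body shape is to express gesture measurements relative to the person's own proportions, for example their shoulder width. The sketch below illustrates that idea for a lean gesture. It is an assumption-laden example rather than the approach the group used: the function name, the choice of shoulder and hip points, the `threshold` value, and the mapping of image direction to "left" or "right" (which depends on camera mirroring) are all hypothetical. The points are assumed to be (x, y) pairs in normalized image coordinates, such as those a pose tracker typically reports.

```python
def lean_signal(left_shoulder, right_shoulder, left_hip, right_hip,
                threshold=0.25):
    """Classify a sideways lean as -1, 0 or +1.

    The horizontal offset between the shoulder midpoint and the hip
    midpoint is divided by the shoulder width, so the same proportional
    lean gives the same result for narrow and broad builds.
    """
    shoulder_mid_x = (left_shoulder[0] + right_shoulder[0]) / 2
    hip_mid_x = (left_hip[0] + right_hip[0]) / 2
    shoulder_width = abs(left_shoulder[0] - right_shoulder[0])
    if shoulder_width < 1e-6:
        # Shoulders overlap in the image (e.g. person seen side-on);
        # treat this as "no gesture" instead of dividing by zero.
        return 0
    offset = (shoulder_mid_x - hip_mid_x) / shoulder_width
    if offset > threshold:
        return 1
    if offset < -threshold:
        return -1
    return 0

# Two hypothetical people leaning by the same proportion of their
# shoulder width: one narrow, one broad.
narrow = lean_signal((0.45, 0.3), (0.55, 0.3), (0.44, 0.6), (0.50, 0.6))
broad = lean_signal((0.40, 0.3), (0.60, 0.3), (0.39, 0.6), (0.49, 0.6))
```

With an absolute pixel or image-fraction threshold, the broad-shouldered person in this example would have to move twice as far as the narrow one to trigger the same gesture; the normalized offset treats them alike.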

Tools and platforms

Throughout the project, the central development platform was the Unity game engine, in which all the game logic, scripting, particle systems, lighting and overall scenes were assembled. We chose Unity because multiple members of the group had prior experience with it, which gave us a solid foundation for moving directly on to the VR implementation and other more advanced topics. Besides Unity, Maya was used for modeling the enemies and the arena, and the texturing was done in Adobe Substance 3D Painter. These tools were also chosen on the basis of the group members' previous experience. For the audio, Steam Audio was used for rendering, as it seemed to give the best results compared to other high-fidelity audio options and worked nicely with the HTC Vive VR headset we used (which already uses SteamVR). We also used “Netcode for GameObjects” from Unity to handle all our networking needs, since it is supported by Unity out of the box. We believed it would be easier to use than implementing networking ourselves, as we had done in our previous project. For the gesture controls we used Python in combination with the MediaPipe module and OpenCV.

Lessons Learned

Do not set expectations higher than the time allows. It is good to have fallback plans for what you can do if you do not have enough time.

Networking takes a lot of time, especially if you have never used the particular library before. The time it takes to implement networking also increases exponentially.

Having a VR project makes the VR-capable computer a bottleneck. Try to avoid filling that bottleneck with work unrelated to VR.

Related Work

Oudah, M., Al-Naji, A., and Chahl, J. (2020). Hand Gesture Recognition Based on Computer Vision: A Review of Techniques. Journal of Imaging, 6(8):73.

Gumbau, J., Chover, M., and Sbert, M. (2018). Screen Space Soft Shadows. In book: GPU Pro 360, pp. 60-74.

Valve. (2020). Half-Life: Alyx. [GAME]