ABOUT THE GAME

What is Light In The Dark?

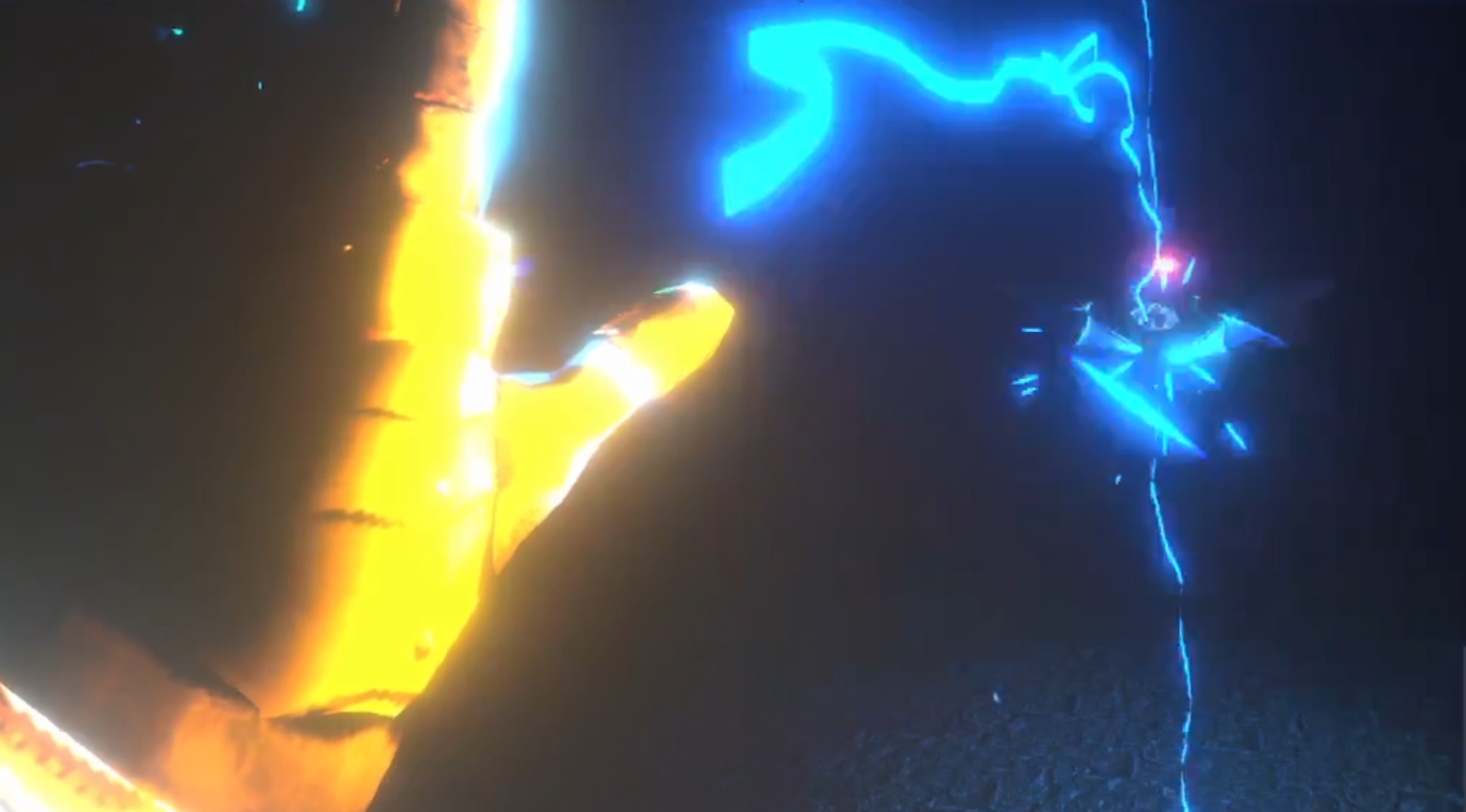

Light In The Dark is an advanced graphics and interaction project created for the course DH2413 at KTH in the autumn of 2022. At its core, the game is a competitive survival experience in which one player tries to stay alive for as long as possible while the other player tries to kill them as fast as possible. The gameplay experience of the two players is highly asymmetrical: the player trying to stay alive views and interacts with a scene through a virtual reality (VR) headset, while the second player directly influences that scene and places enemies into it using a monitor and mouse.

The scene used for the VR view of the game is set in a dark underground arena and features highly detailed textures and modeling for the surrounding environment. There are three different types of enemies, each of which has been modeled, textured and scripted by the group. Additionally, the VR scene features advanced particle systems for fire and electricity. The fire effects are used for a flaming sword wielded by the player as well as a chargeable fireball, while the electricity particles are used to emulate a chaotic electrical ray. While the VR player is fighting for their life, the second player interacts with the scene through a custom networking implementation, which allows the actions of the second player to directly impact the VR experience.

Asymmetrical gameplay

The game features two very distinct gameplay experiences in which two players interact and compete against each other: one through an action-packed, hectic perspective with a VR headset, and the other through a calculating, strategic perspective with a monitor and mouse.

Lighting the dark

When setting out on the project, one of the main motivations of the group was to experiment with high-fidelity lighting. This is also why the game is set in a dark mine, as the darkness emphasizes and truly brings out the light and particle effects in the scene.

Challenges

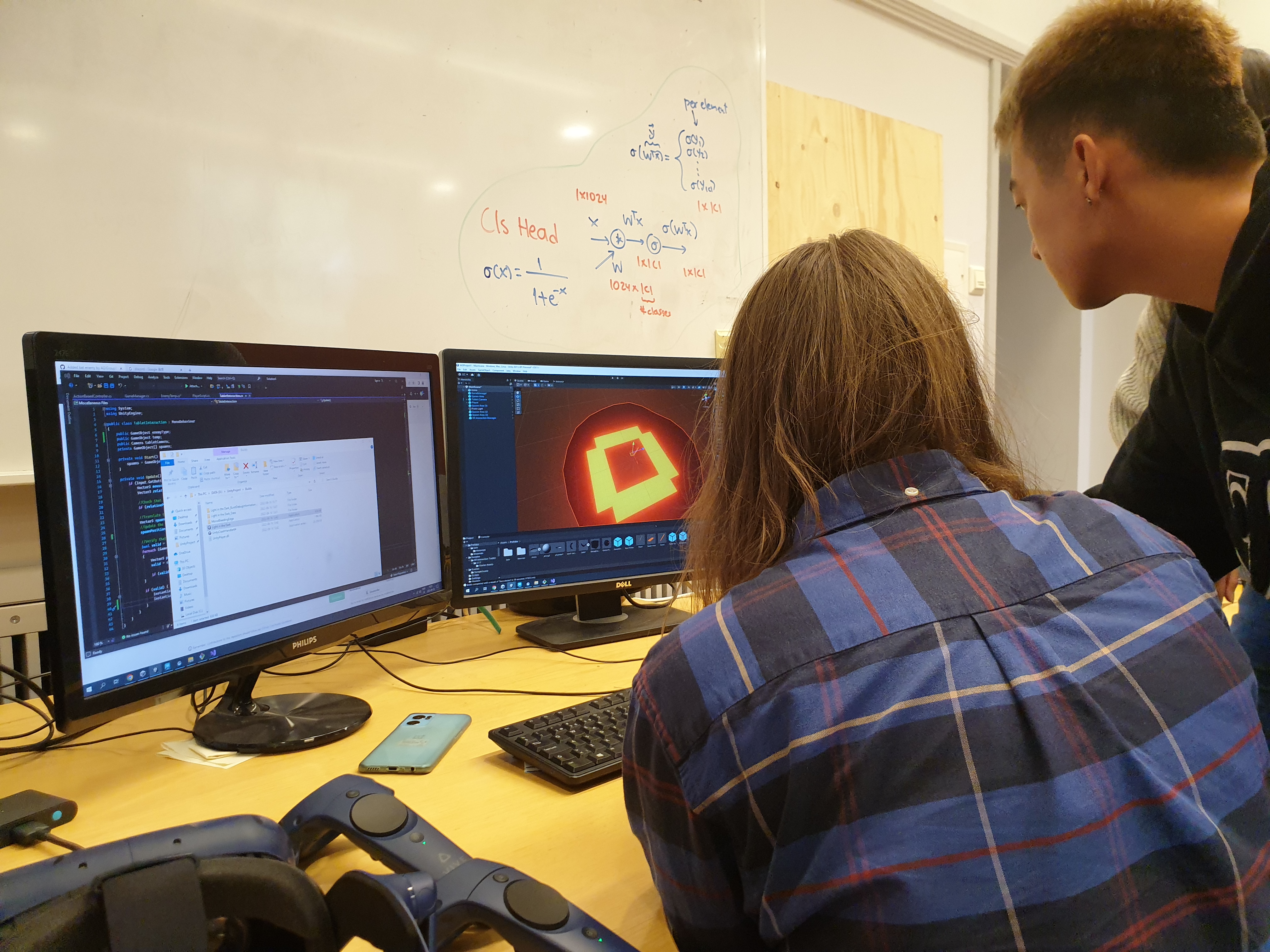

Working on the project presented several challenges to the group. At the start of the project, none of the group members had any experience working with VR, high-fidelity particle systems or advanced audio. To overcome this, the group divided the roles among the members early and made each member responsible for a distinct set of features or areas, tracked on a continually updated Trello board. The group used Discord for further communication and Google Drive for file sharing and documentation.

Obstacles

During the project, the group also ran into a number of obstacles. These mainly related to optimization: because the group often strove for realistic-looking graphics, the performance of the game would sometimes take a hit. One example was lighting in the Unity Universal Render Pipeline (URP), where the renderer would automatically stop rendering light sources when the number of light sources in the scene became too high. We later solved this by switching to the ultimate quality preset in the URP and increasing the maximum number of lights.

Throughout the project, we also had occasional issues with networking. The asynchronous nature of the two clients made it hard to use existing networking solutions such as Unity Netcode for GameObjects. Because of this, we designed and implemented our own network protocol for the game. We had to revise it multiple times to reduce the amount of data sent, as the throughput was otherwise too high, overwhelming both clients and making them drop packets. The inherently asynchronous nature of networking also caused minor issues: each client had to be synchronized both with the other client and with its own networking thread, which led to bugs stemming from race conditions between the game logic thread and the networking thread.
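A common way to avoid race conditions between a networking thread and a game logic thread is to never let the networking thread touch game state directly, and instead hand messages over through a thread-safe queue that the game loop drains once per frame. The sketch below illustrates that idea in Python. It is not the project's actual Unity code: the names `NetworkInbox`, `network_worker` and `run_demo`, the message format and the queue size are all assumptions made for illustration.

```python
import queue
import threading


class NetworkInbox:
    """Hypothetical hand-off point between a networking thread and the game loop."""

    def __init__(self, max_pending=256):
        # queue.Queue is thread-safe, so no extra locking is needed here.
        self._pending = queue.Queue(maxsize=max_pending)
        self.dropped = 0  # written only by the networking thread

    def push(self, message):
        """Called on the networking thread. Never blocks; drops when full."""
        try:
            self._pending.put_nowait(message)
        except queue.Full:
            self.dropped += 1

    def drain(self, limit=64):
        """Called on the game thread once per frame; returns at most `limit` messages."""
        messages = []
        while len(messages) < limit:
            try:
                messages.append(self._pending.get_nowait())
            except queue.Empty:
                break
        return messages


def network_worker(inbox, incoming):
    """Stand-in for a socket receive loop (assumed): forwards each message to the inbox."""
    for message in incoming:
        inbox.push(message)


def run_demo():
    inbox = NetworkInbox()
    game_state = {"enemies": []}
    incoming = [("spawn_enemy", i) for i in range(10)]

    worker = threading.Thread(target=network_worker, args=(inbox, incoming))
    worker.start()
    worker.join()

    # One simulated frame: only the game thread mutates game_state.
    for kind, payload in inbox.drain():
        if kind == "spawn_enemy":
            game_state["enemies"].append(payload)
    return game_state, inbox.dropped
```

Capping the queue size and the number of messages handled per frame also bounds how much work a burst of incoming traffic can cause in a single frame, at the cost of dropping or delaying messages under load.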

Towards the end of the project, the network issues were largely resolved, but we still had occasional connection issues, after which both users needed to restart the game.

The electrical arc ray also had its share of issues. These mainly related to making the arc behave realistically, chain between enemies and spawn smaller “child arcs” from the main arcs without causing too much of a performance hit.
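One simple way to keep a chaining effect cheap is to cap both the jump range and the number of hops, so the cost per arc stays bounded no matter how many enemies are in the scene. The sketch below shows a greedy nearest-neighbor chain in Python. The source does not describe how the group actually implemented chaining, so the function `chain_arc`, its parameters and the default values are assumptions for illustration only.

```python
import math


def chain_arc(origin, enemies, max_range=5.0, max_hops=4):
    """Greedy chain: from the current point, jump to the nearest unvisited
    enemy within max_range, for at most max_hops jumps.

    origin and enemies are (x, y, z) tuples. All parameters are hypothetical.
    """
    chain = []
    current = origin
    remaining = list(enemies)
    while remaining and len(chain) < max_hops:
        nearest = min(remaining, key=lambda e: math.dist(current, e))
        if math.dist(current, nearest) > max_range:
            break  # nothing close enough to jump to
        chain.append(nearest)
        remaining.remove(nearest)
        current = nearest
    return chain
```

For example, `chain_arc((0, 0, 0), [(1, 0, 0), (3, 0, 0), (20, 0, 0), (4, 0, 0)])` visits the three nearby enemies in order and ignores the one that is out of range. A similar fixed budget could be applied to the number of child arcs spawned per hop.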

Another, broader obstacle that remained in the game is that it is not currently playable in single-player form (not counting just gawking at the graphics in the VR headset). At one point in the project, the group planned to have the enemies spawn from fixed monster spawners that would generate monsters over time, as opposed to having the non-VR player actively summon them. The idea was that the game could then be played by either one or two players, but we never had enough time to implement this, as we prioritized fixing the features we already had.

Tools and platforms

Throughout the project, the central development platform was the Unity game engine, in which all the game logic, scripting, particle systems, lighting and overall scenes were created and assembled. Unity was chosen because multiple members of the group had prior experience working with it, which created a solid foundation for moving directly onto the VR implementation and other more advanced topics. Other than Unity, Maya, ZBrush and Blender were used for modeling the enemies and the arena, and the texturing was done in Adobe Substance 3D Painter. These tools were also chosen on the basis of the group members' previous experience. For the audio, Steam Audio was used for rendering, as it seemed to give the best results compared to other high-fidelity audio options and worked nicely alongside the HTC Vive VR headset used (which already uses SteamVR).

Lessons Learned

Combining the work of different people takes time, and you will run into many bugs in the process. It is therefore good to set aside enough time for merging.

It's good to have a clear structure in the project before the files start to get messy.

It's good to think about performance when you are creating your objects, and not to create more detail than necessary.

The view in the VR headset is very different from the desktop view. When doing graphics programming for VR, it is therefore important to also test it in the VR headset.

Audio is additive, so great care must be taken to adjust for multiple simultaneous sound sources in order to create a meaningful experience.

Related Work

Gawron, M., & Boryczka, U. (2018). Heterogeneous fog generated with the effect of light scattering and blur. Journal of Applied Computer Science, 26(2), 31-43.

Cao, C., Ren, Z., Schissler, C., Manocha, D., & Zhou, K. (2016). Interactive sound propagation with bidirectional path tracing. ACM Transactions on Graphics (TOG), 35(6), 1-11.

Lee, D. F., Huang, X. W., Chen, Y. C., Hsu, Y. X., Chang, S. Y., & Han, P. H. (2020). FoodBender: Activating Utensil for Playing in the Immersive Game with Attachable Haptic. In SIGGRAPH Asia 2020 XR (pp. 1-2).